🎨 ReStyle3D

Scene-level Appearance Transfer with Semantic Correspondences

SIGGRAPH 2025

Liyuan Zhu Shengqu Cai Shengyu Huang Gordon Wetzstein1 Naji Khosravan3 Iro Armeni1

1Stanford University 2NVIDIA Research 3Zillow Group

*Equal contribution

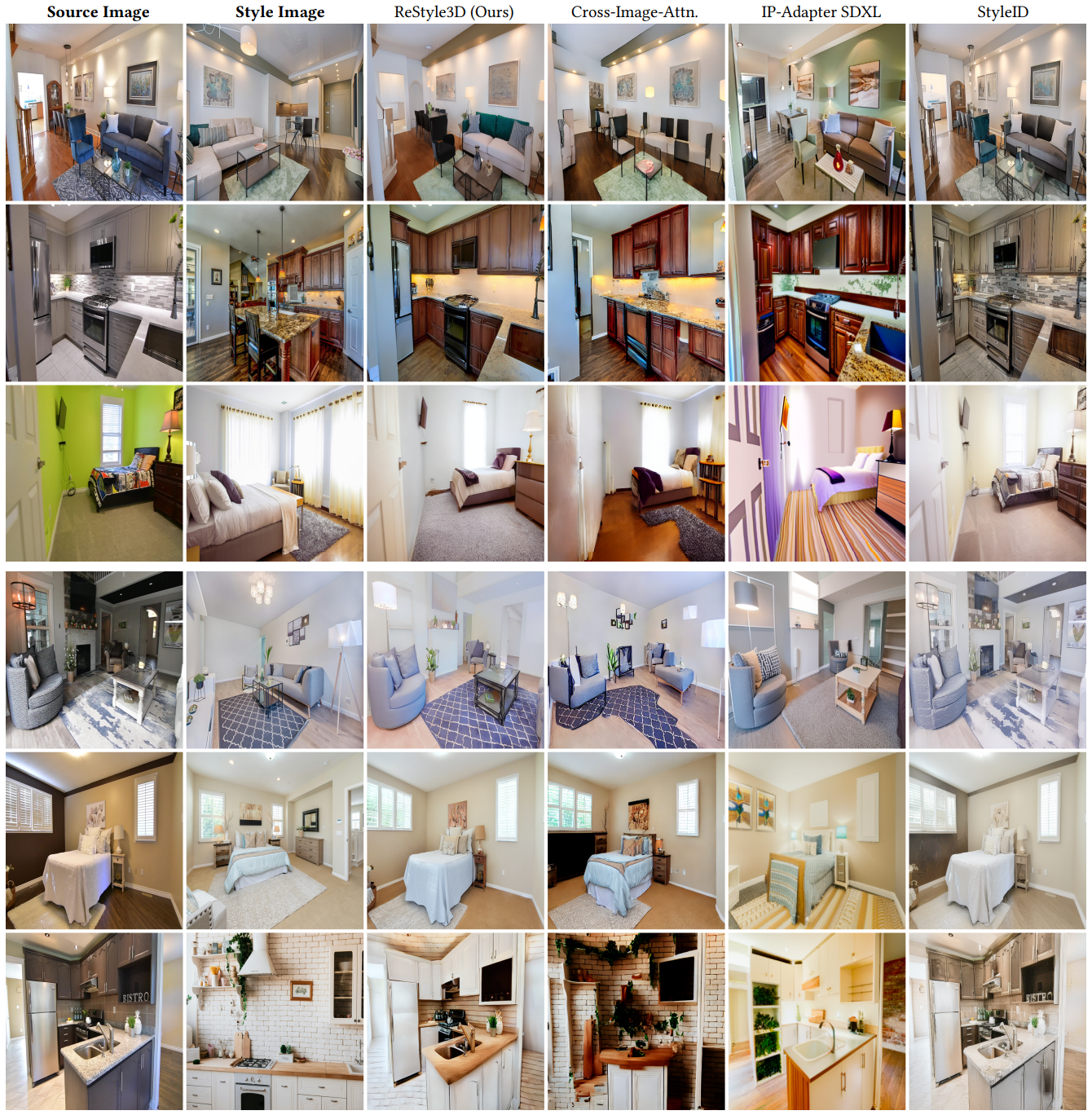

We introduce ReStyle3D, a novel framework for scene-level appearance transfer from a single style image to a real-world scene represented by multiple views. The method combines explicit semantic correspondences with multi-view consistency to achieve precise and coherent stylization. Unlike conventional stylization methods that apply a reference style globally, ReStyle3D uses open-vocabulary segmentation to establish dense, instance-level correspondences between the style and real-world images, so that each object is stylized with semantically matched textures. ReStyle3D first transfers the style to a single view using a training-free semantic-attention mechanism in a diffusion model. It then lifts the stylization to additional views via a learned warp-and-refine network guided by monocular depth and pixel-wise correspondences. Experiments show that ReStyle3D consistently outperforms prior methods in structure preservation, perceptual style similarity, and multi-view coherence. User studies further validate its ability to produce photo-realistic, semantically faithful results. Our code, pretrained models, and dataset will be publicly released to support new applications in interior design, virtual staging, and 3D-consistent stylization.
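To make the idea of semantically matched attention concrete, here is a minimal NumPy sketch, not the released implementation. It assumes every token already carries an integer semantic label (for example from a segmentation model), and that the matching rule is simple label equality. The function name `semantic_extended_attention` and all argument names are hypothetical.

```python
import numpy as np

def semantic_extended_attention(q_out, k_out, v_out, k_style, v_style,
                                labels_out, labels_style):
    """Attention of output tokens over [own tokens ; style tokens].

    Assumption: a style token is visible to an output token only when both
    carry the same semantic label. Shapes: q_out/k_out (N, d), v_out (N, d_v),
    k_style (M, d), v_style (M, d_v), labels_out (N,), labels_style (M,).
    """
    d = q_out.shape[-1]
    keys = np.concatenate([k_out, k_style], axis=0)      # (N + M, d)
    values = np.concatenate([v_out, v_style], axis=0)    # (N + M, d_v)
    logits = q_out @ keys.T / np.sqrt(d)                 # (N, N + M)

    # The self-attention part is always visible; the style part is visible
    # only where the labels agree.
    same_label = labels_out[:, None] == labels_style[None, :]      # (N, M)
    self_part = np.ones((q_out.shape[0], k_out.shape[0]), dtype=bool)
    visible = np.concatenate([self_part, same_label], axis=1)
    logits = np.where(visible, logits, -np.inf)

    # Row-wise softmax; masked entries receive exactly zero weight.
    logits = logits - logits.max(axis=1, keepdims=True)
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ values, weights
```

Because the self-attention part is never masked, every row keeps at least one finite logit, so an output token whose label has no counterpart in the style image simply falls back to attending to its own image.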

[Figure: pipeline overview with two panels, "Stage 1: Semantic Appearance Transfer" and "Stage 2: Multi-view Stylization"]

The two-stage ReStyle3D pipeline. In Stage 1, the style and source images are first noised back to step 𝑇 using DDPM inversion [2024]. While the stylized output is generated, an extended self-attention layer transfers style information from the style latent to the output latent. A semantic matching mask guides this transfer, giving precise control over which regions exchange appearance. In Stage 2, stereo correspondences are extracted from the original image pair and used to warp the stylized image to the second view. Because warping leaves some pixels missing, we train a warp-and-refine model to complete the stylized image. This model is applied across multiple views within our auto-regressive framework.
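The warping step of Stage 2 can be illustrated with a small NumPy sketch. It assumes the correspondences are already available as integer pixel pairs and uses a plain forward scatter; how the correspondences are computed and how the refinement model consumes the result are outside this sketch. The function name `warp_with_correspondences` and its arguments are hypothetical.

```python
import numpy as np

def warp_with_correspondences(stylized, src_xy, dst_xy, out_shape):
    """Scatter stylized pixels into a second view; return image and hole mask.

    stylized : (H, W, C) stylized first view
    src_xy   : (K, 2) integer (x, y) coordinates in the first view
               (assumed to lie inside the image)
    dst_xy   : (K, 2) integer (x, y) matching coordinates in the second view
    out_shape: (H2, W2) size of the second view
    """
    h2, w2 = out_shape
    warped = np.zeros((h2, w2, stylized.shape[2]), dtype=stylized.dtype)
    filled = np.zeros((h2, w2), dtype=bool)

    # Drop matches that land outside the second view.
    inside = ((dst_xy[:, 0] >= 0) & (dst_xy[:, 0] < w2) &
              (dst_xy[:, 1] >= 0) & (dst_xy[:, 1] < h2))
    src, dst = src_xy[inside], dst_xy[inside]

    # Forward scatter. Collisions (several source pixels mapping to one
    # target pixel) are not resolved by depth in this simplified version.
    warped[dst[:, 1], dst[:, 0]] = stylized[src[:, 1], src[:, 0]]
    filled[dst[:, 1], dst[:, 0]] = True

    # Pixels never written to are the holes a refinement model must complete.
    return warped, ~filled
```

In an auto-regressive setting, the warped image and its hole mask for one view would be completed before that view serves as the source for the next one.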

@inproceedings{zhu2025_restyle3d,
  author = {Liyuan Zhu and Shengqu Cai and Shengyu Huang and Gordon Wetzstein and Naji Khosravan and Iro Armeni},
  title = {Scene-level Appearance Transfer with Semantic Correspondences},
  booktitle = {ACM SIGGRAPH 2025 Conference Papers},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  year = {2025},
  url = {https://doi.org/10.1145/3721238.3730655},
  doi = {10.1145/3721238.3730655},
}